Note

You can view and download the original notebook on GitHub.

Or, click here to run these notebooks on Coiled with access to Dask clusters.

Running on a cluster

We’ll use a Dask cluster in the cloud (in this case, one managed by Coiled) so that many machines can process the data in parallel. Running the cluster in the same data center where the data is stored also gives better performance.

If you use Coiled (which is both easy to use and currently free!), you can set software="gjoseph92/stackstac" to get a software environment with the latest version of stackstac already installed.

[1]:

import coiled
import distributed

cluster = coiled.Cluster(
    name="stackstac",
    software="gjoseph92/stackstac",
    # Region chosen so the cluster runs close to where the data is stored.
    backend_options={"region": "us-west-2"},
)
client = distributed.Client(cluster)
client

Using existing cluster: stackstac


[2]:

import stackstac

import satsearch

from rasterio.enums import Resampling

Search for a full year of Sentinel-2 data over Santa Fe, New Mexico

We’ll look at calendar year 2019 (the search interval runs from 2019-01-01 to 2020-01-01).

[3]:

%%time
items = satsearch.Search(
    url="https://earth-search.aws.element84.com/v0",
    intersects=dict(type="Point", coordinates=[-106, 35.7]),
    collections=["sentinel-s2-l2a-cogs"],
    datetime="2019-01-01/2020-01-01",
).items()
len(items)

CPU times: user 84.8 ms, sys: 20.7 ms, total: 106 ms

Wall time: 3.09 s

[3]:

294
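For readers unfamiliar with STAC, the search above boils down to three filters: a collection, a geometry, and a time interval. The sketch below writes the same filters as the kind of JSON body a STAC API search endpoint accepts. How satsearch builds and pages its requests internally is not shown in this notebook, so treat the exact body as an assumption; nothing is sent over the network here.

```python
import json

# Same parameters as the satsearch call above, written as a STAC API search body.
search_body = {
    "collections": ["sentinel-s2-l2a-cogs"],
    # GeoJSON geometry: coordinates are in (longitude, latitude) order.
    "intersects": {"type": "Point", "coordinates": [-106, 35.7]},
    # Start and end of the time interval, separated by a slash.
    "datetime": "2019-01-01/2020-01-01",
}

print(json.dumps(search_body, indent=2))
```

Each returned item describes one Sentinel-2 scene, which is why a single point over one year yields a few hundred items.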

Set a coarser resolution to speed up the computation

We’ll have stackstac retrieve the data at 100 m resolution instead of its native 10 m. Since the data is stored in Cloud-Optimized GeoTIFFs with internal overviews, fetching lower-resolution data is very efficient, and because each pixel is 10 times larger on each side, the resulting array has about 100 times fewer pixels than it would at full resolution.

(Internally, stackstac is just telling rasterio/GDAL to build a VRT at this resolution. GDAL then automatically figures out which overview level to fetch data from.)

We also set bounds_latlon to just the area we want to look at (additionally, this drops any items that don’t intersect that bounding box), and set the resampling method to bilinear to produce a nicer-looking image.

[4]:

%%time
stack = stackstac.stack(
    items,
    resolution=100,
    bounds_latlon=[-106.2, 35.6, -105.6, 36],
    resampling=Resampling.bilinear,
)

CPU times: user 205 ms, sys: 10.6 ms, total: 216 ms

Wall time: 215 ms
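The overview selection mentioned above can be pictured with a small sketch. It assumes a file with native 10 m pixels and overviews at decimation factors 2, 4, 8, and 16 (an assumption; the actual levels depend on how each file was written) and picks the coarsest level whose pixels are still no larger than the requested resolution. GDAL's real selection logic has more nuance, and `pick_overview` is a hypothetical helper, not a GDAL or stackstac function.

```python
def pick_overview(native_res, factors, target_res):
    """Return (factor, resolution) of the coarsest level not coarser than target_res.

    A factor of 1 stands for the full-resolution image.
    """
    best = (1, native_res)
    for factor in sorted(factors):
        res = native_res * factor
        if res <= target_res:
            best = (factor, res)
    return best


factor, res = pick_overview(native_res=10, factors=[2, 4, 8, 16], target_res=100)
print(f"read the x{factor} overview ({res} m pixels), then resample to 100 m")
print(f"pixels read per band: 1/{factor ** 2} of full resolution")
```

Under these assumptions the 80 m overview is read and then resampled to 100 m, so only a small fraction of each file has to be fetched.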

[5]:

stack

[5]:

<xarray.DataArray 'stackstac-ae169f4201b4c70e2f19d70d036371ff' (time: 294, band: 17, y: 450, x: 547)>

dask.array<fetch_raster_window, shape=(294, 17, 450, 547), dtype=float64, chunksize=(1, 1, 450, 547), chunktype=numpy.ndarray>

Coordinates: (12/23)

* time (time) datetime64[ns] 2019-01-02T18:04:01 ......

id (time) <U24 'S2A_13SDV_20190102_0_L2A' ... 'S...

* band (band) <U8 'overview' 'visual' ... 'WVP' 'SCL'

* x (x) float64 3.914e+05 3.914e+05 ... 4.46e+05

* y (y) float64 3.985e+06 3.985e+06 ... 3.94e+06

sentinel:sequence (time) object None None None ... '0' '0' '0'

... ...

sentinel:product_id (time) <U60 'S2A_MSIL2A_20190102T175731_N0211...

proj:epsg int64 32613

view:off_nadir int64 0

sentinel:latitude_band <U1 'S'

created (time) <U24 '2020-09-23T19:20:47.956Z' ... '2...

title (band) object None ... 'Scene Classification ...

Attributes:

spec: RasterSpec(epsg=32613, bounds=(391300, 3939700, 446000, 3984...

crs: epsg:32613

transform: | 100.00, 0.00, 391300.00|\n| 0.00,-100.00, 3984700.00|\n| 0...

resolution: 100

        Array                  Chunk
Bytes   9.84 GB                1.97 MB
Shape   (294, 17, 450, 547)    (1, 1, 450, 547)
Count   14995 Tasks            4998 Chunks
Type    float64                numpy.ndarray

Coordinates elided from the summary above: updated, constellation, sentinel:data_coverage, instruments, data_coverage, eo:cloud_cover, sentinel:valid_cloud_cover, gsd, platform, sentinel:utm_zone, sentinel:grid_square.
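The shape and size reported for the stack follow directly from its RasterSpec. As a quick check, this sketch recomputes them from the bounds and resolution shown in the output, assuming 8-byte float64 values and decimal units (1 GB = 10^9 bytes), which is what the reported sizes appear to use.

```python
# Values copied from the stack's repr.
xmin, ymin, xmax, ymax = 391300, 3939700, 446000, 3984700  # RasterSpec bounds, in meters
resolution = 100        # meters per pixel
n_time, n_band = 294, 17
itemsize = 8            # bytes per float64 value

width = (xmax - xmin) // resolution
height = (ymax - ymin) // resolution
chunk_bytes = height * width * itemsize        # one (time, band) slice
total_bytes = n_time * n_band * chunk_bytes

print((height, width))                  # (450, 547)
print(f"{chunk_bytes / 1e6:.2f} MB")    # 1.97 MB
print(f"{total_bytes / 1e9:.2f} GB")    # 9.84 GB
```

Each chunk is one band of one scene, which is why there are 294 × 17 = 4998 chunks.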

For comparison, this is how much data we’d be processing if we’d used the defaults, meaning full 10 m resolution and no bounds_latlon, so the full extent of the items:

[6]:

import dask

dask.utils.format_bytes(stackstac.stack(items).nbytes)

[6]:

'9.21 TB'
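A little arithmetic separates that gap into two factors. The sketch below assumes the sizes are reported in decimal units and recomputes the 100 m stack's byte count from its shape. The split into a "resolution" factor and an "area" factor is an interpretation of the numbers, not something stackstac reports.

```python
full_bytes = 9.21e12                       # '9.21 TB', as reported above
coarse_bytes = 294 * 17 * 450 * 547 * 8    # the 100 m, clipped stack (about 9.84 GB)

total_factor = full_bytes / coarse_bytes
resolution_factor = (100 / 10) ** 2        # pixels are 10x finer in both x and y
area_factor = total_factor / resolution_factor

print(f"total:      ~{total_factor:.0f}x")
print(f"resolution: {resolution_factor:.0f}x")
print(f"area:       ~{area_factor:.1f}x")
```

The resolution accounts for a factor of 100. The remainder comes from the larger area covered when the output is not clipped to a bounding box.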

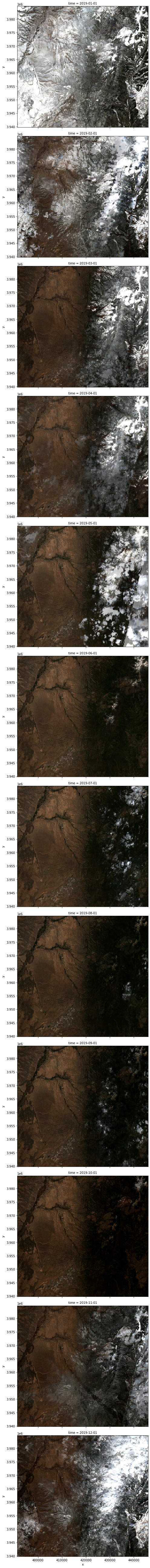

Prepare monthly RGB composites

Now, use standard xarray methods to select out the red, green, and blue bands, then make monthly median composites.

[7]:

rgb = stack.sel(band=["B04", "B03", "B02"])

monthly_rgb = rgb.resample(time="MS").median(dim="time")

monthly_rgb

[7]:

<xarray.DataArray 'stackstac-ae169f4201b4c70e2f19d70d036371ff' (time: 12, band: 3, y: 450, x: 547)>

dask.array<stack, shape=(12, 3, 450, 547), dtype=float64, chunksize=(1, 2, 450, 547), chunktype=numpy.ndarray>

Coordinates:

* time (time) datetime64[ns] 2019-01-01 ... 2019-12-01

* band (band) <U8 'B04' 'B03' 'B02'

* x (x) float64 3.914e+05 3.914e+05 ... 4.46e+05

* y (y) float64 3.985e+06 3.985e+06 ... 3.94e+06

constellation <U10 'sentinel-2'

instruments <U3 'msi'

gsd int64 10

sentinel:utm_zone int64 13

proj:epsg int64 32613

view:off_nadir int64 0

sentinel:latitude_band <U1 'S'

title (band) object 'Band 4 (red)' ... 'Band 2 (blue)'

        Array                Chunk
Bytes   70.89 MB             3.94 MB
Shape   (12, 3, 450, 547)    (1, 2, 450, 547)
Count   16833 Tasks          24 Chunks
Type    float64              numpy.ndarray
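To see what a monthly median composite does, here is a pure-Python sketch for a single pixel. The reflectance values are made up for illustration, and the code only mimics the idea (group observations by the start of their month, ignore missing values, take the median); it is not how xarray or Dask implement it.

```python
import math
from collections import defaultdict
from datetime import date
from statistics import median

# (acquisition date, reflectance) for one pixel; NaN marks missing data.
# These values are invented for the example.
observations = [
    (date(2019, 1, 2), 0.12),
    (date(2019, 1, 12), 0.95),   # a bright outlier, such as a cloud
    (date(2019, 1, 22), 0.14),
    (date(2019, 2, 1), float("nan")),
    (date(2019, 2, 11), 0.16),
    (date(2019, 2, 21), 0.18),
]

# Group by the first day of each month, similar in spirit to resample(time="MS").
by_month = defaultdict(list)
for day, value in observations:
    if not math.isnan(value):    # skip missing values
        by_month[day.replace(day=1)].append(value)

composite = {month: median(values) for month, values in sorted(by_month.items())}
for month, value in composite.items():
    print(month, value)
```

In January the cloudy value of 0.95 does not drag the result upward the way a mean would, which is the main reason to prefer a median for composites like these.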

Compute in parallel on the cluster

[8]:

%time rgb_ = monthly_rgb.compute()

CPU times: user 1.29 s, sys: 273 ms, total: 1.56 s

Wall time: 2min 28s

Using 4 Coiled workers (4 CPUs and 16 GiB of memory each), we reduced the red, green, and blue bands of the ~10 GB stack to monthly median composites in about two and a half minutes.
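As a rough size check on that computation, the sketch below counts the bytes going in and coming out. It assumes Dask only computes the chunks for the three selected bands (the stack is chunked one band per chunk) and that sizes are in decimal units.

```python
itemsize = 8                 # bytes per float64 value
height, width = 450, 547     # pixels, from the stack's shape

input_bytes = 294 * 3 * height * width * itemsize    # 294 scenes x 3 bands
output_bytes = 12 * 3 * height * width * itemsize    # 12 months x 3 bands

print(f"input:  {input_bytes / 1e9:.2f} GB")     # 1.74 GB
print(f"output: {output_bytes / 1e6:.2f} MB")    # 70.89 MB
```

Only the small result is transferred back to the notebook; the larger intermediate arrays stay on the workers.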

Go to the dashboard at https://cloud.coiled.io to watch the computation in progress.

[9]:

rgb_.plot.imshow(row="time", rgb="band", robust=True, size=6)

[9]:

<xarray.plot.facetgrid.FacetGrid at 0x12be930d0>

According to the Coiled dashboard, this cost about 74 cents. Was this picture worth 74 cents to you?